Kaggle is a website geared towards teaching data science through free online tutorials, shared notebooks and datasets, and competitions. I first heard about this resource through the UC Berkeley Data Analytics bootcamp, and much of the coursework for that bootcamp was drawn from publicly available datasets on Kaggle. In addition to thousands of free, easily accessible datasets that you can analyze and build ML models with, there are also introductory courses that cover basic to advanced data science and ML concepts, such as data cleaning, feature engineering, game AI, and neural networks, as well as basic programming skills in Python and pandas. You can check out these courses here.

In addition to free, interactive online courses, Kaggle lets you upload your own datasets to share with others who might want to use your data. So far, I have shared two datasets: one for the cryptocurrency ETL project listed above, and one for a comprehensive Magic: The Gathering (MTG) card catalog, available here.

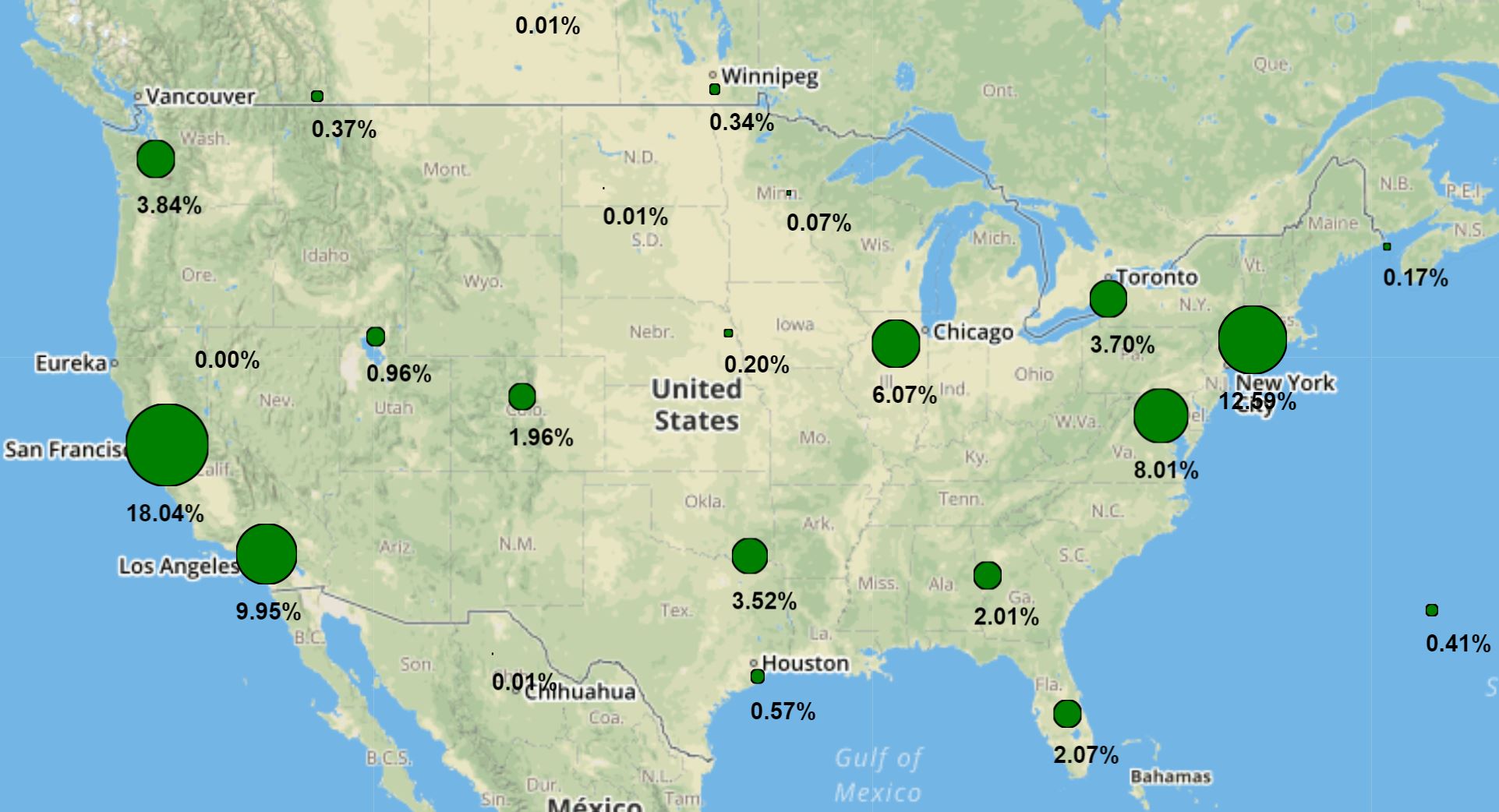

The MTG card catalog dataset has been by far the more popular of the two. My guess is that many Kaggle users are students or younger people who have less use for cryptocurrency pricing data, since they may not be working or have funds to invest.

The website also hosts online data science and ML competitions. These can serve as educational tools for learning new data science and ML techniques, or as a way to make some money, since some competitions offer cash or other rewards for top-ranked submissions. Competition is usually stiff in the cash competitions, and people of all experience levels and backgrounds can enter, so I have not yet participated in any non-educational competitions. The competitions can feature difficult datasets from real companies. Those companies sometimes sponsor a competition to get working solutions to their data science problems without having to hire full-time data scientists (a sort of "outsourcing" solution). Competitions occasionally lead to internship or job offers, but they are probably more competitive than regular hiring channels. Also, the score differences between the top-ranked teams are often slight, so some competitions suffer from diminishing returns: the extra effort needed to win the prize money can go well beyond what a real-world solution would require.

Finally, if you want to see examples of the certificates Kaggle awards when you complete one of its introductory online courses, you can check out my Intro to AI Ethics certificate, Intro to Deep Learning certificate, and Intro to Game AI and Reinforcement Learning certificate!

These certificates are not too hard to get, since each course takes only about 4 to 8 hours of work, but they sure are fun to look at!